The CLASSIFY Action

Classification requires Brobot version 1.0.5 or later.

The CLASSIFY Action differs from Find.COLOR mainly in that it returns the largest matches rather than the matches with the best scores. The largest matches usually correspond to semantic objects, and finding objects is what CLASSIFY is usually used for.
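
The difference between the two selection rules can be illustrated with a minimal sketch. The `Candidate` class and both helper methods below are hypothetical stand-ins written for this example; they are not part of the Brobot API and only show what "largest" versus "best score" means when ranking matches.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

class MatchSelection {

    /** Hypothetical stand-in for a match: a bounding box size plus a similarity score. */
    static final class Candidate {
        final String name;
        final int width;
        final int height;
        final double score;

        Candidate(String name, int width, int height, double score) {
            this.name = name;
            this.width = width;
            this.height = height;
            this.score = score;
        }

        int area() {
            return width * height;
        }
    }

    /** Up to maxMatches candidates, largest area first (the CLASSIFY-style rule). */
    static List<Candidate> largest(List<Candidate> candidates, int maxMatches) {
        List<Candidate> sorted = new ArrayList<>(candidates);
        sorted.sort(Comparator.comparingInt(Candidate::area).reversed());
        return sorted.subList(0, Math.min(maxMatches, sorted.size()));
    }

    /** Up to maxMatches candidates, highest score first (the best-score rule). */
    static List<Candidate> bestScoring(List<Candidate> candidates, int maxMatches) {
        List<Candidate> sorted = new ArrayList<>(candidates);
        sorted.sort(Comparator.comparingDouble((Candidate c) -> c.score).reversed());
        return sorted.subList(0, Math.min(maxMatches, sorted.size()));
    }

    public static void main(String[] args) {
        // Made-up values: a tiny, near-perfect color match and a large, object-sized region.
        List<Candidate> candidates = Arrays.asList(
                new Candidate("speck", 4, 4, 0.99),
                new Candidate("kobold-sized region", 40, 60, 0.80));

        System.out.println("largest:    " + largest(candidates, 1).get(0).name);
        System.out.println("best score: " + bestScoring(candidates, 1).get(0).name);
    }
}
```

A small patch of pixels can match a color profile almost perfectly without being an object, which is why ranking by size tends to surface whole objects.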

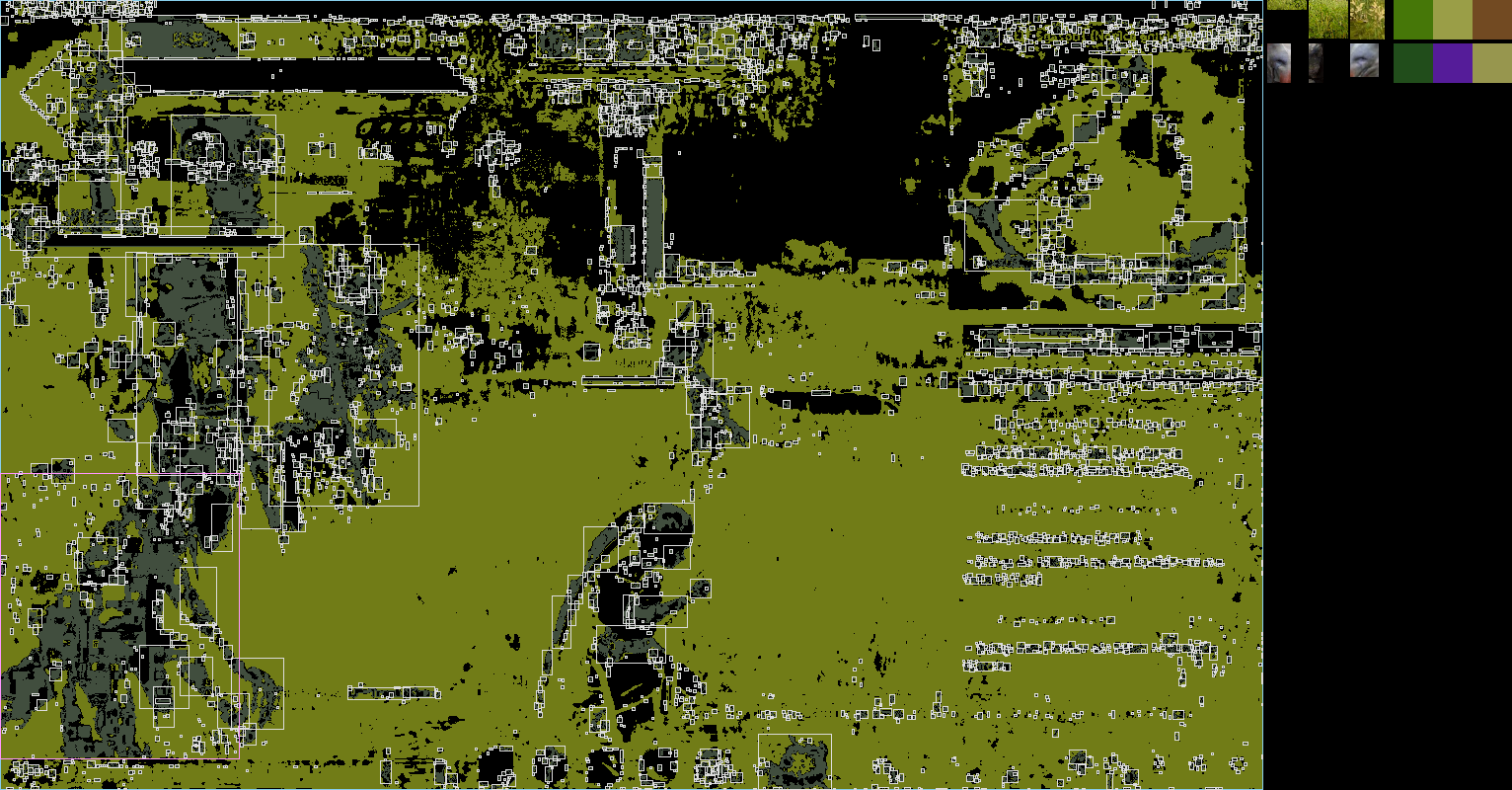

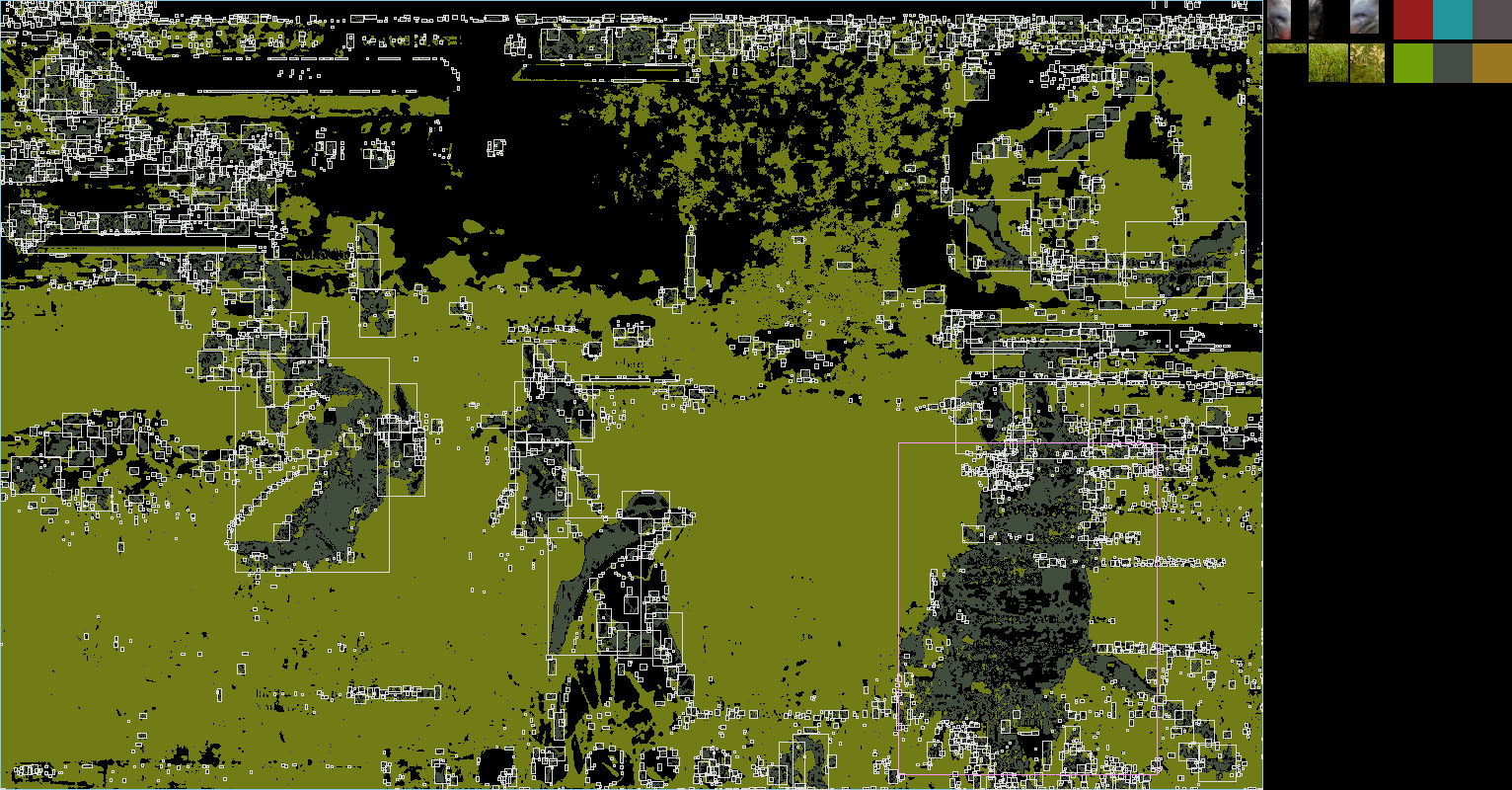

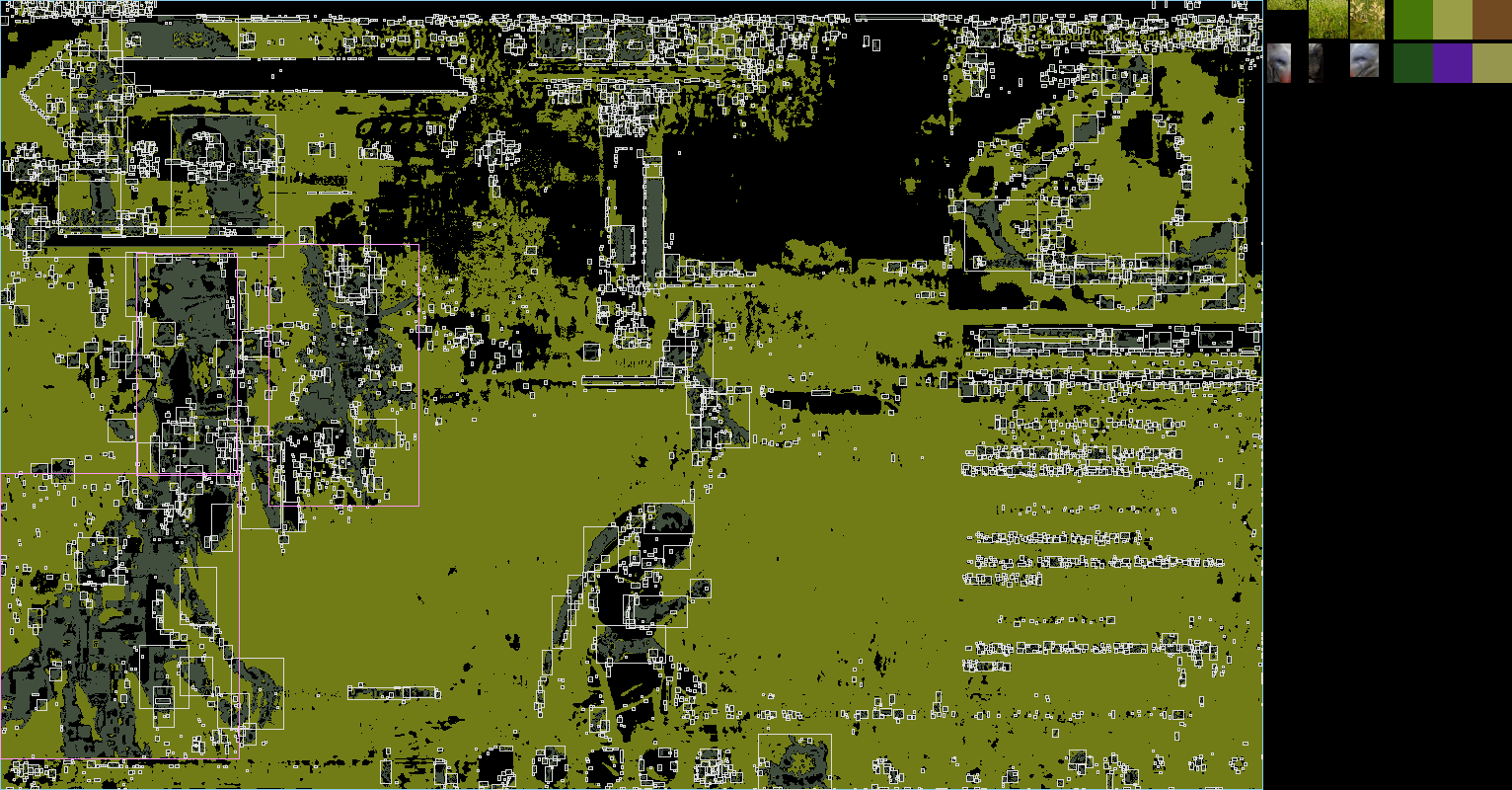

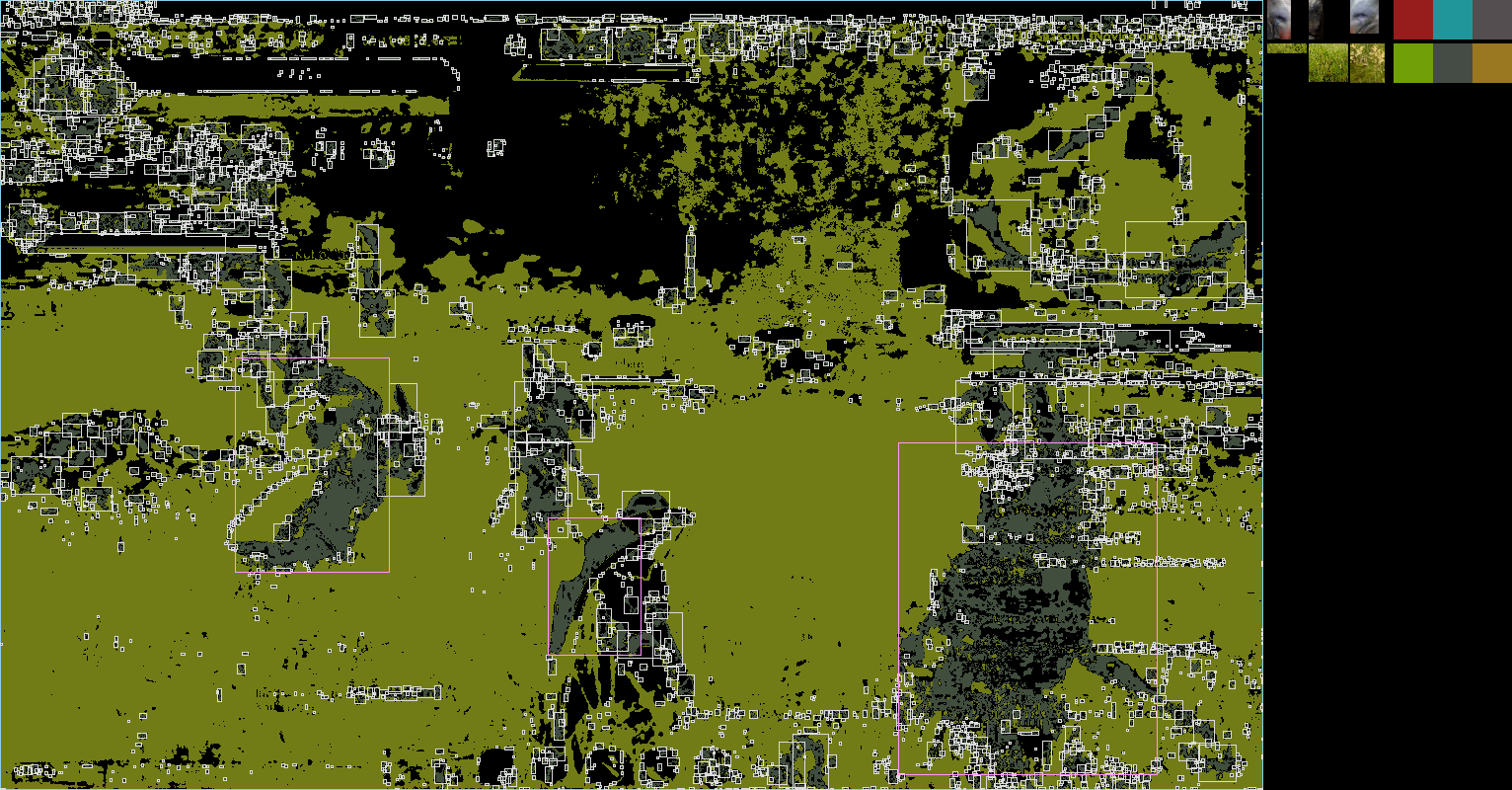

CLASSIFY saves two images to the history folder:

- The scene, with bounding boxes showing the matches and the search regions. The contents of the matches are displayed to the right of the scene, each in its own box.

- The scene displayed as classes. Each image is broken down into k-means color clusters, and the first of these clusters is used as the display color for the image. Every pixel in the scene that belongs to an image's class (i.e. the pixel is most likely to belong to this image) is drawn in that image's display color. White bounding boxes are drawn around clusters of pixels belonging to a target image's class, light blue boxes mark the search regions, and pink boxes mark the selected clusters. To the right of the scene, each Brobot image is shown with the contents of all of its image files and the center colors of its k-means clusters. For example, if k-means is set to 3, the center colors of the 3 clusters are shown.
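
The per-pixel class assignment described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not Brobot's implementation: the cluster centers are taken as already computed, colors are plain RGB triples, and "most likely to belong to this image" is approximated by the nearest cluster center. The class name, method names, and all color values are hypothetical.

```java
class PixelClassSketch {

    /** Squared Euclidean distance between two colors given as {r, g, b}. */
    static int distSq(int[] a, int[] b) {
        int sum = 0;
        for (int i = 0; i < 3; i++) {
            int d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    /** Distance from a pixel to the closest of one image's cluster centers. */
    static int distToImage(int[] pixel, int[][] centers) {
        int best = Integer.MAX_VALUE;
        for (int[] center : centers) {
            best = Math.min(best, distSq(pixel, center));
        }
        return best;
    }

    /** Index of the image whose nearest cluster center is closest to the pixel. */
    static int classify(int[] pixel, int[][][] imageCenters) {
        int bestImage = 0;
        int bestDist = Integer.MAX_VALUE;
        for (int i = 0; i < imageCenters.length; i++) {
            int d = distToImage(pixel, imageCenters[i]);
            if (d < bestDist) {
                bestDist = d;
                bestImage = i;
            }
        }
        return bestImage;
    }

    public static void main(String[] args) {
        // Made-up cluster centers for k = 3; real centers come from running k-means on each image.
        int[][][] centers = {
            { {150, 60, 40}, {90, 30, 20}, {200, 120, 90} },  // image 0, e.g. the target
            { {40, 160, 50}, {30, 120, 40}, {70, 190, 80} }   // image 1, e.g. the background
        };
        int[][] scenePixels = { {145, 65, 45}, {35, 150, 55} };

        for (int[] pixel : scenePixels) {
            int cls = classify(pixel, centers);
            int[] display = centers[cls][0];  // the first cluster supplies the display color
            System.out.println("class " + cls + " drawn as rgb("
                    + display[0] + ", " + display[1] + ", " + display[2] + ")");
        }
    }
}
```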

Example 1:

CLASSIFY with 1 target image, 1 background image, a k-means of 3, and 3 max matches.

    // Save the illustrated results to the history folder.
    BrobotSettings.saveHistory = true;
    BrobotSettings.mock = true;
    // Use kobolds1.png as the scene.
    BrobotSettings.screenshot = "kobolds1.png";

    ActionOptions findClass = new ActionOptions.Builder()
            .setAction(ActionOptions.Action.CLASSIFY)
            .setMaxMatchesToActOn(3) // at most 3 matches
            .setKmeans(3)            // k-means of 3
            .build();

    // The target image to find.
    ObjectCollection target = new ObjectCollection.Builder()
            .withImages(classifyState.getKobold())
            .build();

    // The background image.
    ObjectCollection additional = new ObjectCollection.Builder()
            .withImages(classifyState.getGrass())
            .build();

    action.perform(findClass, target, additional);

This configuration gives us the following results:

If we change the scene to kobolds2.png, we get the following results:

Example 2:

To build a labeled dataset for localization, only the best match per scene is needed. If the game character stays in this position while screenshots are taken, a large dataset can be built without supervision. The accuracy of the matches could be improved further by specifying search regions, using more images for classification, or placing the game character in an area with only grass.

    ActionOptions findClass = new ActionOptions.Builder()
            .setAction(ActionOptions.Action.CLASSIFY)
            .setMaxMatchesToActOn(1)
            .setKmeans(3)
            .build();
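
As a rough illustration of the dataset idea, the single match found in each screenshot could be written out as one label row per scene. The sketch below is an assumption about how such labels might be stored, not something Brobot provides: the CSV layout, the `LocalizationLabels` class, and the bounding-box numbers are all hypothetical placeholders.

```java
import java.util.ArrayList;
import java.util.List;

class LocalizationLabels {

    /** One CSV row: screenshot name, then x, y, width, height of the best match. */
    static String labelRow(String screenshot, int x, int y, int w, int h) {
        return screenshot + "," + x + "," + y + "," + w + "," + h;
    }

    public static void main(String[] args) {
        List<String> rows = new ArrayList<>();
        rows.add("file,x,y,w,h");

        // Placeholder boxes. In practice each box would come from the one
        // match returned for that screenshot when max matches is set to 1.
        rows.add(labelRow("kobolds1.png", 0, 0, 10, 10));
        rows.add(labelRow("kobolds2.png", 5, 5, 10, 10));

        for (String row : rows) {
            System.out.println(row);
        }
    }
}
```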